Hyper-Object Challenge @ ICASSP 2026

Reconstructing Hyperspectral Cubes of Everyday Objects from Low-Cost Inputs

About the Challenge

Hyperspectral imaging (HSI) captures fine-grained spectral information, enabling precise material analysis. However, commercial HSI cameras are expensive and bulky. In contrast, RGB cameras are affordable and ubiquitous.

The Hyper-Object Challenge aims to revolutionize access to spectral imaging by developing learning-based models that can reconstruct high-fidelity hyperspectral cubes (400-1000 nm) from low-cost inputs like mosaic sensor data or standard low-resolution RGB images. This would unlock applications from counterfeit detection to agricultural monitoring using everyday devices.

Diverse everyday objects from the Hyper-Object dataset.

Challenge Tracks

The competition comprises two distinct tracks, each defined by a different low-cost input format $i_k$.

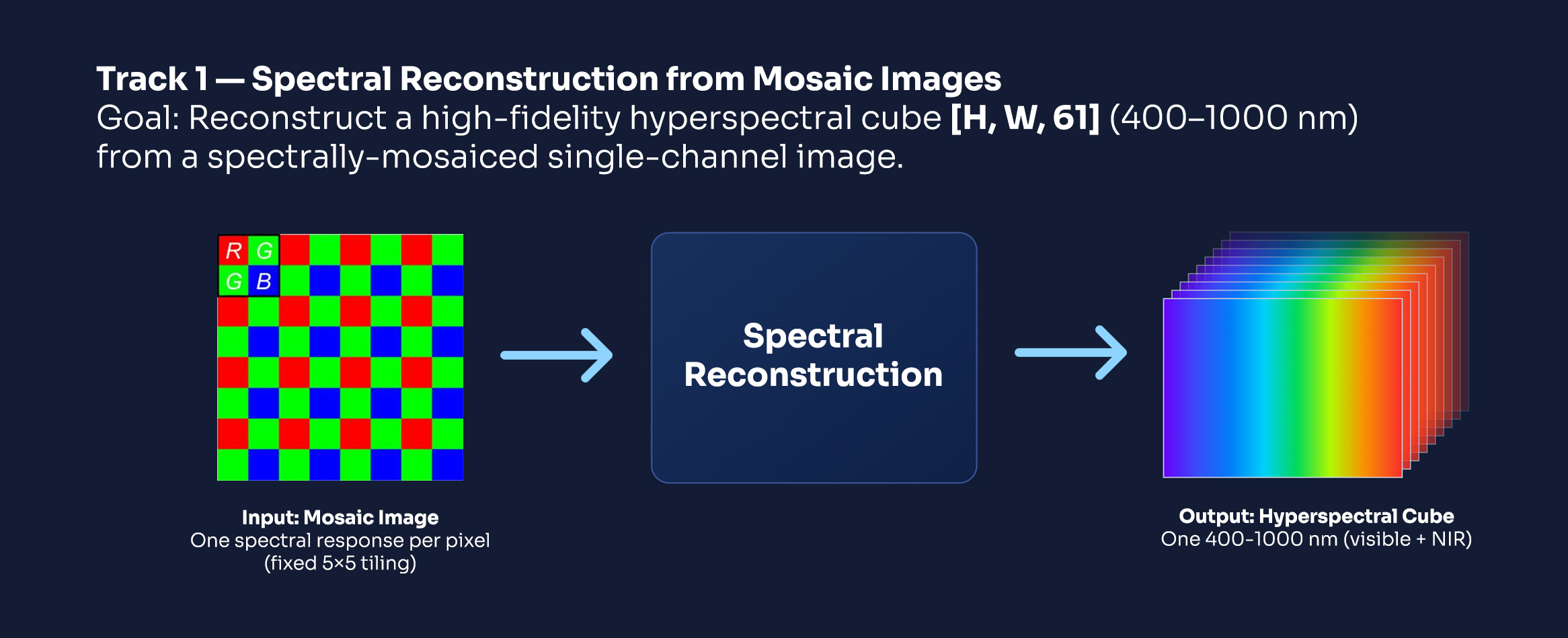

Track 1: Spectral Reconstruction from Mosaic Images

Each input $i_k$ is a mosaic image that captures only one spectral band per pixel.

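Every pixel is filtered according to a predefined tiling pattern that mimics a snapshot spectral sensor. The task is to reconstruct the full spectral cube $\hat{X}_k \in \mathbb{R}^{H \times W \times C}$ from the single-channel mosaic $i_k \in \mathbb{R}^{H \times W}$.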
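To make the mosaic input concrete, the sketch below simulates a single-channel mosaic from a 61-band cube. The challenge's actual tiling pattern is not given on this page, so the 4×4 `pattern` of band indices, the helper `simulate_mosaic`, and the constant `WAVELENGTHS_NM` are hypothetical illustrations, not the sensor's real layout.

```python
import numpy as np

# 61 band centres from 400 to 1000 nm at 10 nm spacing (C = 61).
WAVELENGTHS_NM = np.arange(400, 1001, 10)

def simulate_mosaic(cube: np.ndarray, pattern: np.ndarray) -> np.ndarray:
    """Keep one band per pixel according to a periodic tiling pattern.

    cube:    H x W x C hyperspectral cube.
    pattern: p x q array of band indices, tiled over the image (hypothetical).
    Returns an H x W single-channel mosaic.
    """
    h, w, c = cube.shape
    if pattern.min() < 0 or pattern.max() >= c:
        raise ValueError("pattern contains an out-of-range band index")
    p, q = pattern.shape
    rows, cols = np.indices((h, w))
    # Band index observed at each pixel: pattern[row mod p, col mod q].
    band = pattern[rows % p, cols % q]
    return np.take_along_axis(cube, band[..., None], axis=2)[..., 0]

# Hypothetical 4x4 pattern sampling 16 of the 61 bands, evenly spaced.
pattern = np.linspace(0, 60, 16).round().astype(int).reshape(4, 4)
cube = np.random.rand(8, 8, WAVELENGTHS_NM.size).astype(np.float32)
mosaic = simulate_mosaic(cube, pattern)
print(WAVELENGTHS_NM.size, mosaic.shape)  # 61 (8, 8)
```

A Track 1 model learns the inverse of this kind of operator: recovering all 61 values per pixel from the single observed one and its neighbours.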

Objective

Recover all C = 61 spectral bands (400 – 1000 nm, 10 nm spacing) from the spectrally subsampled observation.

Evaluation

Submissions are ranked by the Spectral-Spatial-Color (SSC) score, which ranges over [0, 1] (higher is better).

- Spectral: SAM, SID, ERGAS

- Spatial: PSNR, SSIM on a standardized sRGB render (D65, CIE 1931 2°)

- Color: ΔE00 on the same sRGB render
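The spatial and color terms are computed on an sRGB rendering of the cube. The organizers' evaluation scripts on Kaggle are authoritative; what follows is only a generic sketch of a standard spectral-to-sRGB pipeline. It assumes the caller supplies `cmf` (the CIE 1931 2° color matching functions resampled to the 61 band centres) and `illuminant` (the D65 spectral power distribution at the same samples); the function name `render_srgb` and the normalization of a perfect white reflector to Y = 1 are assumptions. Because the CIE tables end at 830 nm, bands beyond that receive zero weight in such a render.

```python
import numpy as np

# Standard XYZ (D65 white) -> linear sRGB matrix from IEC 61966-2-1.
XYZ_TO_LINEAR_SRGB = np.array([
    [3.2406, -1.5372, -0.4986],
    [-0.9689, 1.8758, 0.0415],
    [0.0557, -0.2040, 1.0570],
])

def render_srgb(cube, cmf, illuminant):
    """Render an H x W x C reflectance cube to gamma-encoded sRGB in [0, 1].

    cmf:        C x 3 colour matching functions (x-bar, y-bar, z-bar) at the band centres.
    illuminant: length-C spectral power distribution (e.g. D65) at the same samples.
    """
    weights = illuminant[:, None] * cmf          # C x 3
    # Normalise so that a perfect white reflector has luminance Y = 1.
    weights = weights / weights[:, 1].sum()
    xyz = cube @ weights                         # H x W x 3
    rgb_lin = np.clip(xyz @ XYZ_TO_LINEAR_SRGB.T, 0.0, 1.0)
    # Piecewise sRGB transfer function (linear segment near black, then gamma).
    return np.where(rgb_lin <= 0.0031308,
                    12.92 * rgb_lin,
                    1.055 * np.power(rgb_lin, 1 / 2.4) - 0.055)
```

Clipping before gamma encoding is one common choice for out-of-gamut colors; the official script may handle them differently.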

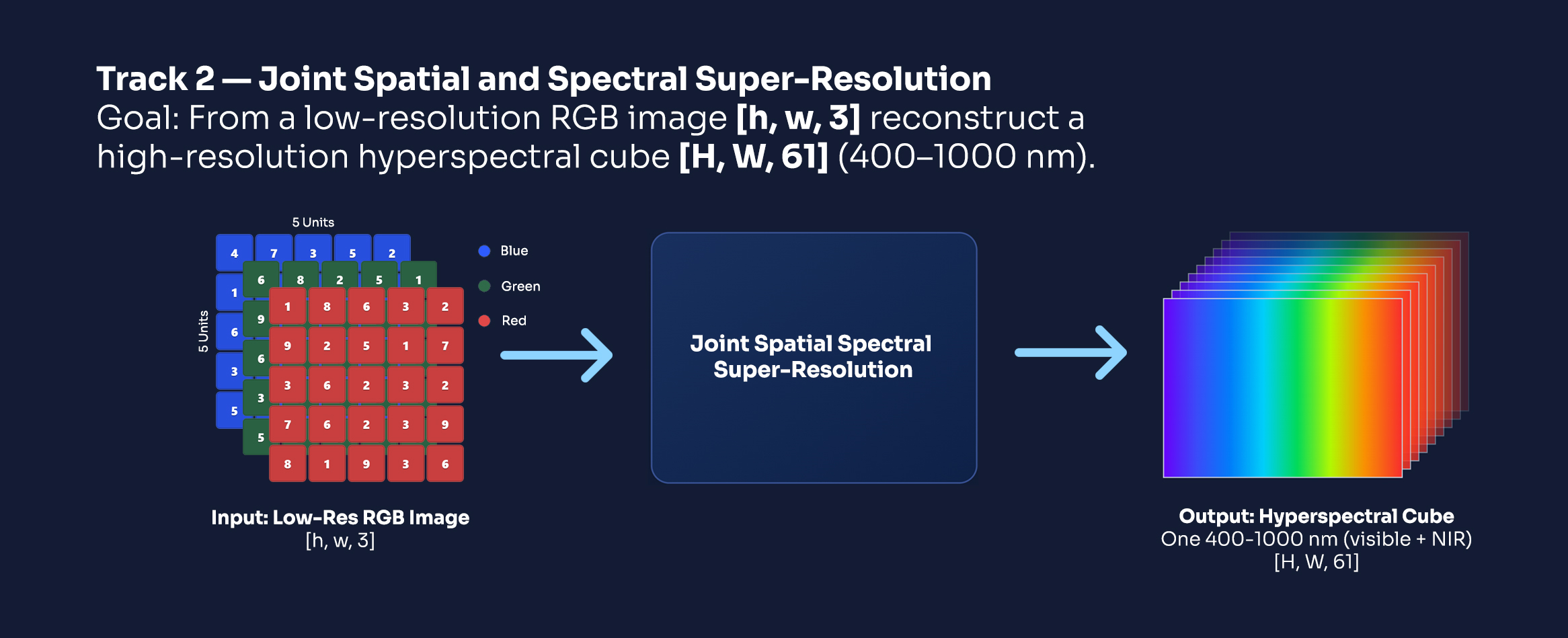

Track 2: Joint Spatial and Spectral Super-Resolution

Each input $i_k$ is a low-resolution RGB image captured by a commodity camera.

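The goal is to jointly recover spatial and spectral resolution by reconstructing $\hat{X}_k \in \mathbb{R}^{H \times W \times C}$ from $i_k \in \mathbb{R}^{h \times w \times 3}$, where $C \gg 3$, $H \gg h$, and $W \gg w$.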
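To illustrate the shapes involved, the sketch below is a deliberately naive Track 2 pipeline: a per-pixel affine map from RGB to C bands fitted by least squares, followed by nearest-neighbour upsampling. It is not the challenge baseline. The integer upsampling factor `scale` (requiring H = scale·h and W = scale·w), the block averaging used to align the high-resolution training cube with the low-resolution RGB, and all function names are assumptions.

```python
import numpy as np

def block_average(cube, scale):
    """Downsample an H x W x C cube by averaging non-overlapping scale x scale blocks."""
    h, w, c = cube.shape
    return cube.reshape(h // scale, scale, w // scale, scale, c).mean(axis=(1, 3))

def fit_linear_rgb_to_hsi(rgb_lr, cube_hr, scale):
    """Least-squares affine map from RGB (plus a bias term) to C bands.

    rgb_lr:  h x w x 3 low-resolution RGB training image.
    cube_hr: H x W x C high-resolution cube with H = scale * h, W = scale * w.
    Returns a 4 x C weight matrix.
    """
    target = block_average(cube_hr, scale).reshape(-1, cube_hr.shape[2])
    x = rgb_lr.reshape(-1, 3)
    x = np.hstack([x, np.ones((x.shape[0], 1))])  # append bias column
    weights, *_ = np.linalg.lstsq(x, target, rcond=None)
    return weights

def predict(rgb_lr, weights, scale):
    """Map each RGB pixel to C bands, then upsample by pixel repetition."""
    h, w, _ = rgb_lr.shape
    x = np.hstack([rgb_lr.reshape(-1, 3), np.ones((h * w, 1))])
    cube_lr = (x @ weights).reshape(h, w, -1)
    cube_hr = cube_lr.repeat(scale, axis=0).repeat(scale, axis=1)
    return np.clip(cube_hr, 0.0, 1.0).astype(np.float32)
```

A learned model replaces both steps, but the input and output shapes stay the same: h × w × 3 in, H × W × 61 out.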

Objective

Produce a high-fidelity hyperspectral cube that restores full spatial and spectral resolution.

Evaluation

Submissions are ranked by the Spectral-Spatial-Color (SSC) score, which ranges over [0, 1] (higher is better).

- Spectral: SAM, SID, ERGAS

- Spatial: PSNR, SSIM on a standardized sRGB render (D65, CIE 1931 2°)

- Color: ΔE00 on the same sRGB render

Important Dates (Tentative)

- Registration Opens

- Public Testing Set & Baseline Release

- Private Testing Set Release

- Submission Closes

- Winner Announcements

- 2-page Papers Due (by invitation)

- Paper Acceptance Notification

- Camera-ready Papers Due

- Submissions will only be considered if accompanied by the full code.

- Detailed instructions for code submission will be provided before the submission deadline.

- The top three winners from each track will be selected based on code evaluation on the hidden test set.

- Participants must ensure that their code runs successfully on our end for the final evaluation.

- Winners will be notified via email.

How to Participate

Access Resources

Get the training data, baseline models, and evaluation scripts on our Kaggle page.

Develop Your Model

Train your learning-based model to reconstruct high-fidelity hyperspectral cubes.

Submit & Compete

Submit your predictions on the held-out test set and climb the leaderboard.

Register to access test sets & submissions

Register on Google Forms (one form submission per Kaggle team).

About the Test Sets

- Public test set (with ground truth): for self-testing and local validation.

- Private test set (without ground truth): used for the Kaggle leaderboard.

- Hidden test set: final evaluation; distributed to registered teams only.

What you’ll receive after registration

- “Hidden-private” test set

- Submit your reconstructed cube

- Submit your code & trained model

Organizers

- Pai Chet Ng, Singapore Institute of Technology

- Konstantinos N. Plataniotis, University of Toronto

- Juwei Lu, University of Toronto

- Gabriel Lee Jun Rong, Singapore Institute of Technology

- Malcolm Low, Singapore Institute of Technology

- Nikolaos Boulgouris, Brunel University

- Thirimachos Bourlai, University of Georgia

- Seyed Mohammad Sheikholeslami, University of Toronto

Frequently Asked Questions

What is the goal of the Hyper-Object Challenge?

The Hyper-Object Challenge pushes the frontier of low-cost hyperspectral imaging. Participants are invited to develop deep learning models that reconstruct high-fidelity hyperspectral image cubes (400–1000 nm, 61 bands) of real-world objects from cost-effective input formats, such as spectral mosaics or low-resolution RGB images. The goal is to democratize access to spectral imaging technology for real-world applications like agriculture, cultural heritage, forensics, and material analysis.

What are the competition tracks?

We offer two tracks, each focusing on a different practical constraint:

- Track 1 – Spectral Reconstruction from Mosaic Images
  - Input: A single-channel spectral mosaic image (1 band per pixel)
  - Output: A full-resolution hyperspectral cube with 61 bands

- Track 2 – Joint Spatial & Spectral Super-Resolution
  - Input: A low-resolution RGB image (captured by a commodity camera)
  - Output: A high-resolution hyperspectral cube (61 bands, upsampled)

You may participate in one or both tracks.

What data is provided?

- Training data – Available now

- Public test data – Released on Aug 25; includes inputs and ground truth for local validation

- Private test data – Input-only; used for leaderboard ranking

- Hidden test data – Used internally to evaluate your submitted model/code for final ranking

Please refer to the Data Description section for more details.

How is scoring done?

We use the Spectral-Spatial-Color (SSC) composite score as the main evaluation metric. It combines the following metrics:

- SAM (Spectral Angle Mapper)

- SID (Spectral Information Divergence)

- ERGAS

- PSNR

- SSIM

- ΔE00 (CIEDE2000 color difference on the rendered sRGB image)

Each metric is normalized and combined into a single SSC score in [0, 1], where higher is better.
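For local validation on the public test set, the individual terms can be computed from their textbook definitions, as sketched below. This is not the organizers' evaluation script (that is provided on Kaggle): the per-pixel averaging, the `EPS` guards, and the ERGAS `ratio` argument (the high-to-low resolution pixel-size ratio, 1 when input and output share a grid) are assumptions, and the normalization that maps each metric into the SSC score is not reproduced. PSNR is included for use on the sRGB render.

```python
import numpy as np

EPS = 1e-8  # guards against division by zero and log(0)

def sam(gt, pred):
    """Mean spectral angle (radians) between ground-truth and predicted pixel spectra."""
    dot = np.sum(gt * pred, axis=-1)
    norms = np.linalg.norm(gt, axis=-1) * np.linalg.norm(pred, axis=-1)
    cos = np.clip(dot / (norms + EPS), -1.0, 1.0)
    return float(np.mean(np.arccos(cos)))

def sid(gt, pred):
    """Mean spectral information divergence (symmetric KL of sum-normalised spectra)."""
    p = gt / (gt.sum(axis=-1, keepdims=True) + EPS) + EPS
    q = pred / (pred.sum(axis=-1, keepdims=True) + EPS) + EPS
    return float(np.mean(np.sum(p * np.log(p / q) + q * np.log(q / p), axis=-1)))

def ergas(gt, pred, ratio=1.0):
    """ERGAS = 100 * ratio * sqrt(mean over bands k of RMSE_k^2 / mu_k^2)."""
    rmse_sq = np.mean((gt - pred) ** 2, axis=(0, 1))  # per-band squared RMSE
    mean_sq = np.mean(gt, axis=(0, 1)) ** 2           # per-band squared GT mean mu_k^2
    return float(100.0 * ratio * np.sqrt(np.mean(rmse_sq / (mean_sq + EPS))))

def psnr(gt, pred, data_range=1.0):
    """PSNR in dB for images with values in [0, data_range]."""
    mse = np.mean((gt - pred) ** 2)
    return float(10.0 * np.log10(data_range ** 2 / max(mse, EPS)))
```

SAM, SID, and ERGAS are lower-is-better while PSNR is higher-is-better, which is why some normalization is needed before they can be combined into a single higher-is-better score.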

What should my model output?

Each sample's output should be a 61-band hyperspectral image cube whose spatial resolution matches the input (Track 1) or a defined high-resolution target (Track 2). Each output must be saved as a `.npy` file named after the test sample ID.
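Writing predictions in this layout takes only a few lines of NumPy. In the sketch below, the `predictions` dict (sample ID to cube) and the helper `save_predictions` are hypothetical names; the float32 cast and clipping to [0, 1] follow the stated submission requirements.

```python
from pathlib import Path

import numpy as np

def save_predictions(predictions, out_dir):
    """Save each predicted cube as <sample_id>.npy (float32, clipped to [0, 1])."""
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    for sample_id, cube in predictions.items():
        cube = np.clip(np.asarray(cube, dtype=np.float32), 0.0, 1.0)
        if cube.ndim != 3 or cube.shape[-1] != 61:
            raise ValueError(f"{sample_id}: expected H x W x 61, got {cube.shape}")
        np.save(out_dir / f"{sample_id}.npy", cube)
```

For example, `save_predictions({"sample123": cube}, "outputs")` writes `outputs/sample123.npy`.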

Can I use external datasets or pre-trained models?

Yes. External datasets, synthetic data, and pre-trained models are allowed, but they must be clearly disclosed in your final write-up. Do not exploit test-set leakage or use non-public data.

Note: The organizers and affiliated students will not participate in the competition.

What is the submission format?

- You must submit a `.zip` file containing one `.npy` file per test sample, named exactly after the sample ID (e.g., `sample123.npy`).

- Each `.npy` file must contain a float32 H×W×61 array with values in [0, 1].
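Before uploading, a quick local check of the archive can catch format mistakes. The sketch below uses a hypothetical helper, `check_submission_zip`, that reads every `.npy` member with Python's standard `zipfile` module and reports members that are not float32, not H×W×61, or outside [0, 1]. It does not verify that file names match the official sample IDs.

```python
import io
import zipfile

import numpy as np

def check_submission_zip(zip_path, num_bands=61):
    """Return a list of human-readable problems found in a submission archive."""
    problems = []
    with zipfile.ZipFile(zip_path) as zf:
        names = [n for n in zf.namelist() if not n.endswith("/")]  # skip directories
        if not names:
            problems.append("archive is empty")
        for name in names:
            if not name.endswith(".npy"):
                problems.append(f"{name}: not a .npy file")
                continue
            arr = np.load(io.BytesIO(zf.read(name)))
            if arr.dtype != np.float32:
                problems.append(f"{name}: dtype is {arr.dtype}, expected float32")
            if arr.ndim != 3 or arr.shape[-1] != num_bands:
                problems.append(f"{name}: shape {arr.shape}, expected H x W x {num_bands}")
            if arr.size and (arr.min() < 0.0 or arr.max() > 1.0):
                problems.append(f"{name}: values outside [0, 1]")
    return problems
```

An empty list means every member passed these checks.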

What is the leaderboard setup?

- The leaderboard reflects scores on the private test set.

- Your final ranking will be based on internal evaluation on the hidden test set using your submitted code.

- This ensures fair and robust evaluation under realistic deployment conditions.

How do I submit my model for final evaluation?

In addition to the `.zip` file of predictions, you must submit:

- Your trained model weights

- A standalone inference script (e.g., `inference.py`) that loads your model and generates output `.npy` files for any given input directory

- A `requirements.txt` listing all dependencies
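The sketch below shows one possible shape for such a script. The command-line flags (`--input_dir`, `--output_dir`, `--weights`), the assumption that inputs arrive as `.npy` files, and the placeholder `load_model` (which returns a trivial stand-in so the script runs end to end, without any upsampling) are all hypothetical; the official instructions will define the actual interface.

```python
import argparse
from pathlib import Path

import numpy as np

NUM_BANDS = 61

def load_model(weights_path):
    """Placeholder: replace with code that restores your trained network from weights_path."""
    def model(inp):
        # Stand-in prediction: broadcast the per-pixel mean to 61 bands.
        img = inp if inp.ndim == 3 else inp[..., None]
        mean = img.mean(axis=-1, keepdims=True)
        return np.repeat(mean, NUM_BANDS, axis=-1)
    return model

def main(argv=None):
    parser = argparse.ArgumentParser(description="Generate one .npy cube per input sample.")
    parser.add_argument("--input_dir", required=True)
    parser.add_argument("--output_dir", required=True)
    parser.add_argument("--weights", default="model.pth")
    args = parser.parse_args(argv)

    model = load_model(args.weights)
    out_dir = Path(args.output_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    for path in sorted(Path(args.input_dir).glob("*.npy")):
        cube = model(np.load(path))
        cube = np.clip(cube, 0.0, 1.0).astype(np.float32)
        np.save(out_dir / path.name, cube)  # keep the sample ID as the file name
```

Saved as `inference.py` with a final `if __name__ == "__main__": main()` line, it would run as `python inference.py --input_dir <inputs> --output_dir <outputs>`.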

We will run your code in a standardized environment and evaluate on the hidden test set.

What is the submission limit?

- You may make up to 5 submissions per day

- A maximum of 3 final code submissions can be made for internal evaluation

- Your highest SSC score from those 3 code submissions will be used for the final ranking

What are the hardware or runtime constraints?

For the final evaluation, your model must run on a single GPU (e.g., NVIDIA RTX 6000 Ada or similar) using less than 24 GB of VRAM and less than 30 minutes of inference time per test sample. Models that exceed these limits may be disqualified.
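A rough local check against the per-sample time budget can be done with `time.perf_counter`, as sketched below; the helper name `time_per_sample` and the way the model is called are assumptions. For PyTorch models on a GPU, call `torch.cuda.synchronize()` before reading the clock so asynchronous kernels are counted, and peak memory can be read with `torch.cuda.max_memory_allocated()` after `torch.cuda.reset_peak_memory_stats()` (not shown here).

```python
import time

TIME_LIMIT_S = 30 * 60  # 30 minutes per test sample

def time_per_sample(predict, samples):
    """Run predict on each sample; return per-sample seconds and the IDs at or over the limit."""
    timings = {}
    for sample_id, inp in samples.items():
        start = time.perf_counter()
        predict(inp)
        timings[sample_id] = time.perf_counter() - start
    too_slow = [name for name, t in timings.items() if t >= TIME_LIMIT_S]
    return timings, too_slow
```

Local hardware differs from the organizers' environment, so a comfortable margin below the limit is advisable.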

Where can I ask questions or get help?

Please post your questions on the Kaggle discussion forum. The organizers will actively respond and clarify rules and requirements.

What license applies to the data?

The dataset is released under the MIT License, which permits free use, modification, and distribution for both research and commercial purposes, provided that the original authors are properly credited.

What do the winners receive?

- Featured in a special session at ICASSP 2026

- Invited to contribute to the joint overview paper with the organizers

- Provided with certificates and digital badges